Leveraging Amazon Bedrock for Security Incident Response

Several organizations are looking to leverage GenAI to enhance their cybersecurity posture. The anticipated benefits of GenAI keep growing, and one of the most hyped areas is AI-SOC, i.e., applying GenAI to Security Operations Centres (SOCs). This is evidenced by the number of AI-SOC startups appearing on the scene and by existing cybersecurity vendors introducing lookalike features.

The same trend is visible within security teams, which are integrating GenAI into custom implementations to optimize existing workflows. While several online resources offer guidance, many security teams would prefer approaches that deliver quick wins.

Ultimately, approaches that offer low barriers to entry and boost productivity are likely to be easier to adopt. A key question most teams ask is: how do we start? The right approach hinges on a few factors, including:

How deep are teams willing to go down the “GenAI rabbit hole”?

How many resources are they willing to commit?

What is the general level of proficiency in GenAI?

If you want quick wins, here is a recommendation: take a look at the session "Generative AI for security in the real world (SEC403)", presented at the recent AWS re:Invent. Here is the YouTube video.

NOTE: I posted about this topic on LinkedIn, and the reception was quite impressive. Check out the post here; it includes a downloadable slide deck.

The session has an accompanying workshop here and a GitHub repo.

What's in it for you?

The presentation provides practical guidelines on leveraging AWS GenAI services to improve an organization's cloud security posture. The approach is divided into three main areas, which loosely map to the three modules that make up the workshop and are also described in the GitHub repo. Each module focuses on a slightly different technique:

Module 1 - Uses Anthropic's Claude 3 to summarize a Security Hub finding, suggest next steps for remediation, and generate code to resolve the finding.

Module 2 - Shows how to use function calling (an advanced technique) to extend Claude 3's capabilities with real-time data.

Module 3 - Reinforces what was learned in Module 1 by investigating a finding, providing next steps, and generating code to resolve the issue.
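Of the three modules, Module 2's function calling is the least self-explanatory. As a rough illustration (not the workshop's code), the sketch below assumes the Bedrock Converse API (`converse` with a `toolConfig`); the tool name `get_security_group_rules`, its input schema, and the handler you pass in are all hypothetical. It shows the basic loop: instead of answering in text, the model returns a `toolUse` request, your code runs the matching function, and the result goes back to the model as a `toolResult` so it can answer with real-time data.

```python
from typing import Any, Callable, Dict, List

# Hypothetical tool definition in the Converse API's toolConfig format.
TOOL_CONFIG: Dict[str, Any] = {
    "tools": [
        {
            "toolSpec": {
                "name": "get_security_group_rules",  # hypothetical tool name
                "description": "Return the inbound rules of an EC2 security group.",
                "inputSchema": {
                    "json": {
                        "type": "object",
                        "properties": {"group_id": {"type": "string"}},
                        "required": ["group_id"],
                    }
                },
            }
        }
    ]
}


def run_tool_calls(
    response: Dict[str, Any], handlers: Dict[str, Callable[..., Any]]
) -> List[Dict[str, Any]]:
    """Execute every toolUse block in a Converse response.

    Returns the toolResult blocks to send back in the next user message.
    """
    results: List[Dict[str, Any]] = []
    for block in response["output"]["message"]["content"]:
        tool_use = block.get("toolUse")
        if tool_use is None:
            continue  # plain text block, nothing to execute
        output = handlers[tool_use["name"]](**tool_use["input"])
        results.append(
            {
                "toolResult": {
                    "toolUseId": tool_use["toolUseId"],
                    "content": [{"json": output}],
                }
            }
        )
    return results


def ask_with_tools(client, model_id: str, question: str, handlers) -> str:
    """One round trip: question -> tool calls -> final text answer."""
    messages = [{"role": "user", "content": [{"text": question}]}]
    response = client.converse(
        modelId=model_id, messages=messages, toolConfig=TOOL_CONFIG
    )
    if response["stopReason"] == "tool_use":
        # Echo the model's tool request, then answer it with the tool results.
        messages.append(response["output"]["message"])
        messages.append({"role": "user", "content": run_tool_calls(response, handlers)})
        response = client.converse(
            modelId=model_id, messages=messages, toolConfig=TOOL_CONFIG
        )
    # Concatenate the text blocks of the final answer.
    return "".join(b.get("text", "") for b in response["output"]["message"]["content"])
```

In practice, `client` would be `boto3.client("bedrock-runtime")` and the handler could call EC2 to return live rule data. A production loop would usually repeat until `stopReason` is no longer `tool_use`; a single round trip keeps the sketch short.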

Improving Incident Response with GenAI

Let's use the steps outlined in the runbook to review Module 1 of the repository. It's pretty awesome stuff. You might ask whether this scenario really fits an incident responder. Remember, though, that Security Hub findings can be fed into the systems used for security incident response, including those used by SOC teams. This solution is aimed primarily at a SOC Level 1 analyst or an entry-level incident responder, but the same approaches could enhance the capabilities of more experienced practitioners.

Persona: Security Incident Responder

Excellent at:

Using threat intelligence.

Tracking Indicators of Compromise (IOCs).

Orchestrating remediation activities.

Low-impact work:

Struggles with understanding diverse query languages.

Difficulty keeping up with query documentation.

Solution: A Natural Language Query Assistant

Powered by GenAI (Amazon Bedrock) to simplify interactions with data sources and create code snippets for remediation.

Expected Outcomes:

Efficiency Gains: Reduces time spent on low-impact work by automating data summarization.

Faster Incident Resolution: Accelerates root cause identification and remediation.

Functional Requirements:

Access to raw datasets for in-depth analysis.

High accuracy and confidence in results.

Ability to handle and adapt to ad-hoc and evolving queries.

Step 1: Get a finding from AWS Security Hub

Feel free to play with the code in the repo. For example, the EC2 finding did not appear initially because I was not strictly following the workshop instructions, so I had to create the misconfiguration manually (a security group rule allowing open access to port 22).

The runbook code below is the starting point for obtaining the Security Hub finding. It uses the boto3 library to retrieve the findings for Security Hub control EC2.19 (security groups should not allow unrestricted access to high-risk ports, which include port 22). Notice in the image below that the resource tag snippet is commented out, since I did not strictly follow the workshop steps.
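If you want to reproduce this step outside the runbook, the retrieval can be sketched as follows. This is not the runbook's code: it assumes consolidated control findings are enabled (so the `ComplianceSecurityControlId` filter applies), and the optional tag filter stands in for the commented-out resource tag snippet, with any tag key and value being hypothetical.

```python
from typing import Any, Dict, List, Optional


def build_filters(
    control_id: str = "EC2.19", tag: Optional[Dict[str, str]] = None
) -> Dict[str, Any]:
    """GetFindings filters for active, failed checks of one security control."""
    filters: Dict[str, Any] = {
        # Assumes consolidated control findings (security control IDs) are enabled.
        "ComplianceSecurityControlId": [{"Value": control_id, "Comparison": "EQUALS"}],
        "ComplianceStatus": [{"Value": "FAILED", "Comparison": "EQUALS"}],
        "RecordState": [{"Value": "ACTIVE", "Comparison": "EQUALS"}],
    }
    if tag:
        # Optional: only resources carrying this (hypothetical) tag key/value.
        filters["ResourceTags"] = [
            {"Key": key, "Value": value, "Comparison": "EQUALS"}
            for key, value in tag.items()
        ]
    return filters


def get_findings(
    client, control_id: str = "EC2.19", tag: Optional[Dict[str, str]] = None
) -> List[Dict[str, Any]]:
    """Collect every matching finding across all result pages."""
    paginator = client.get_paginator("get_findings")
    findings: List[Dict[str, Any]] = []
    for page in paginator.paginate(Filters=build_filters(control_id, tag)):
        findings.extend(page["Findings"])
    return findings
```

With credentials configured, `get_findings(boto3.client("securityhub"))` would return the raw finding dictionaries for EC2.19.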

The screenshot below shows the finding reported by Security Hub: for the security group sg-0897277afeecd0a6c, port 22 (for SSH) is reachable by anyone on the internet (0.0.0.0/0).
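Before handing the finding to Claude, it can help to pull out the few fields a responder actually checks: group ID, name, VPC, and which rules are open to the internet. The helper below is a hypothetical sketch, not part of the runbook. It assumes the finding follows the AWS Security Finding Format (ASFF) with an `AwsEc2SecurityGroup` details block. Passing this compact view, instead of or alongside the full JSON, keeps the prompt short and makes it easy to check the model's summary against the source.

```python
from typing import Any, Dict, List

# CIDRs that mean "anyone on the internet".
WORLD_CIDRS = ("0.0.0.0/0", "::/0")


def summarize_sg_finding(finding: Dict[str, Any]) -> Dict[str, Any]:
    """Reduce an ASFF security group finding to the fields a responder checks."""
    resource = finding["Resources"][0]
    details = resource.get("Details", {}).get("AwsEc2SecurityGroup", {})
    open_rules: List[str] = []
    for perm in details.get("IpPermissions", []):
        cidrs = [r.get("CidrIp") for r in perm.get("IpRanges", [])]
        cidrs += [r.get("CidrIpv6") for r in perm.get("Ipv6Ranges", [])]
        for cidr in cidrs:
            if cidr in WORLD_CIDRS:
                open_rules.append(
                    f"{perm.get('IpProtocol')} {perm.get('FromPort')}-{perm.get('ToPort')} from {cidr}"
                )
    return {
        "title": finding.get("Title"),
        "severity": finding.get("Severity", {}).get("Label"),
        # Fall back to the last segment of the resource ARN if Details is absent.
        "group_id": details.get("GroupId") or resource["Id"].split("/")[-1],
        "group_name": details.get("GroupName"),
        "vpc_id": details.get("VpcId"),
        "open_rules": open_rules,
    }
```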

Step 2: Summarize the finding using Claude 3

In this and the following steps, Anthropic's Claude model is loaded through Amazon Bedrock and then used for several tasks. To summarize the finding, the following prompt is sent to the model:

You are an AWS Security Engineer looking to improve the security posture of your organization. Review the finding and summarize actionable next steps.

The following response is received from the model:

Based on the provided finding, the actionable next steps are:

1. Review the security group `sg-0897277afeecd0a6c` with the name "default" in the VPC `vpc-0728df22eaeae5c90`. This security group has an inbound rule that allows unrestricted access to port 22 (SSH) from any IP address (0.0.0.0/0).

2. Evaluate the necessity of allowing unrestricted access to port 22 from any IP address. If this level of access is not required, modify the security group rule to restrict access to only trusted IP addresses or ranges.

3. If unrestricted access is required for specific use cases, consider implementing additional security controls, such as:
   - Enabling AWS Systems Manager Session Manager for secure remote access to EC2 instances.
   - Implementing bastion hosts or jump boxes to control and monitor access to your resources.
   - Enabling AWS Security Hub standards to continuously monitor and assess your security posture.

4. Review other security groups in your account and ensure that they do not have unrestricted access to high-risk ports, as mentioned in the finding description.

5. Implement a process to regularly review and audit security group configurations to maintain a secure posture and comply with industry best practices and regulatory standards.

6. Consider automating the remediation process using AWS Config Remediation or AWS Systems Manager Automation documents to ensure consistent and timely remediation of security group misconfigurations.

By following these steps, you can address the identified security issue, enhance the security posture of your AWS environment, and align with industry best practices and regulatory standards.
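For readers who want to reproduce Step 2 outside the runbook, here is a minimal sketch of sending a finding to Claude 3 through the Bedrock Runtime `InvokeModel` API using the Anthropic Messages request format. It is not the runbook's code. The model ID is an assumption, so use whichever Claude 3 model is enabled in your account and Region. Placing the prompt in the `system` field, with the finding JSON as the user message, is one reasonable choice and not necessarily what the workshop does.

```python
import json
from typing import Any, Dict

# Assumption: any Claude 3 model enabled in your account/Region works here.
MODEL_ID = "anthropic.claude-3-sonnet-20240229-v1:0"

# The Step 2 prompt, used here as the system prompt (a design choice).
SYSTEM_PROMPT = (
    "You are an AWS Security Engineer looking to improve the security posture "
    "of your organization. Review the finding and summarize actionable next steps."
)


def build_request_body(finding: Dict[str, Any], max_tokens: int = 1024) -> str:
    """Anthropic Messages API request body in the format Bedrock InvokeModel expects."""
    return json.dumps(
        {
            "anthropic_version": "bedrock-2023-05-31",
            "max_tokens": max_tokens,
            "system": SYSTEM_PROMPT,
            "messages": [
                {
                    "role": "user",
                    # default=str guards against non-JSON types such as datetimes.
                    "content": [{"type": "text", "text": json.dumps(finding, default=str)}],
                }
            ],
        }
    )


def summarize_finding(client, finding: Dict[str, Any], model_id: str = MODEL_ID) -> str:
    """Send one finding to Claude via Bedrock and return the text of the reply."""
    response = client.invoke_model(
        modelId=model_id,
        body=build_request_body(finding),
        contentType="application/json",
        accept="application/json",
    )
    payload = json.loads(response["body"].read())
    # The reply is a list of content blocks; keep only the text blocks.
    return "".join(
        block["text"] for block in payload["content"] if block["type"] == "text"
    )
```

With credentials configured, `summarize_finding(boto3.client("bedrock-runtime"), finding)`, where `finding` is one Security Hub finding dictionary, should return text along the lines of the response above.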

Step 3: Use Claude 3 to Generate Python Code for Finding Discovery

This step validates the finding from the previous step, checking for issues such as false positives and helping avoid remediation steps that might be counterproductive. I also liked the validation steps:

Check whether the security group (SG) is attached to an elastic network interface (ENI).

Check whether the remediation code generated by GenAI actually fixes the issue.

It would be good to see AI-SOC products include similar automatic or manual checks. For this step, Claude generates a code snippet that checks whether the security group named in the finding is used by an ENI. The runbook includes further validation steps to ensure the results are accurate.
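The ENI check boils down to a single EC2 API call. Here is a minimal sketch, assuming boto3's `describe_network_interfaces` paginator with the `group-id` filter. This is not the code Claude generated in the runbook, and the function name is hypothetical.

```python
from typing import Dict, List


def enis_using_group(client, group_id: str) -> List[Dict[str, str]]:
    """Return the network interfaces that have `group_id` attached."""
    paginator = client.get_paginator("describe_network_interfaces")
    enis: List[Dict[str, str]] = []
    for page in paginator.paginate(
        Filters=[{"Name": "group-id", "Values": [group_id]}]
    ):
        for eni in page["NetworkInterfaces"]:
            enis.append(
                {
                    "id": eni["NetworkInterfaceId"],
                    "type": eni.get("InterfaceType", ""),
                    # Empty when the ENI is not attached to an EC2 instance.
                    "instance_id": eni.get("Attachment", {}).get("InstanceId", ""),
                }
            )
    return enis
```

An empty result suggests the group is not currently in use. That lowers the urgency, although the group could be attached later. A non-empty result tells you which instances or other resources a remediation would affect. In practice, `client` would be `boto3.client("ec2")`.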

Step 4: Generation of Remediation Code

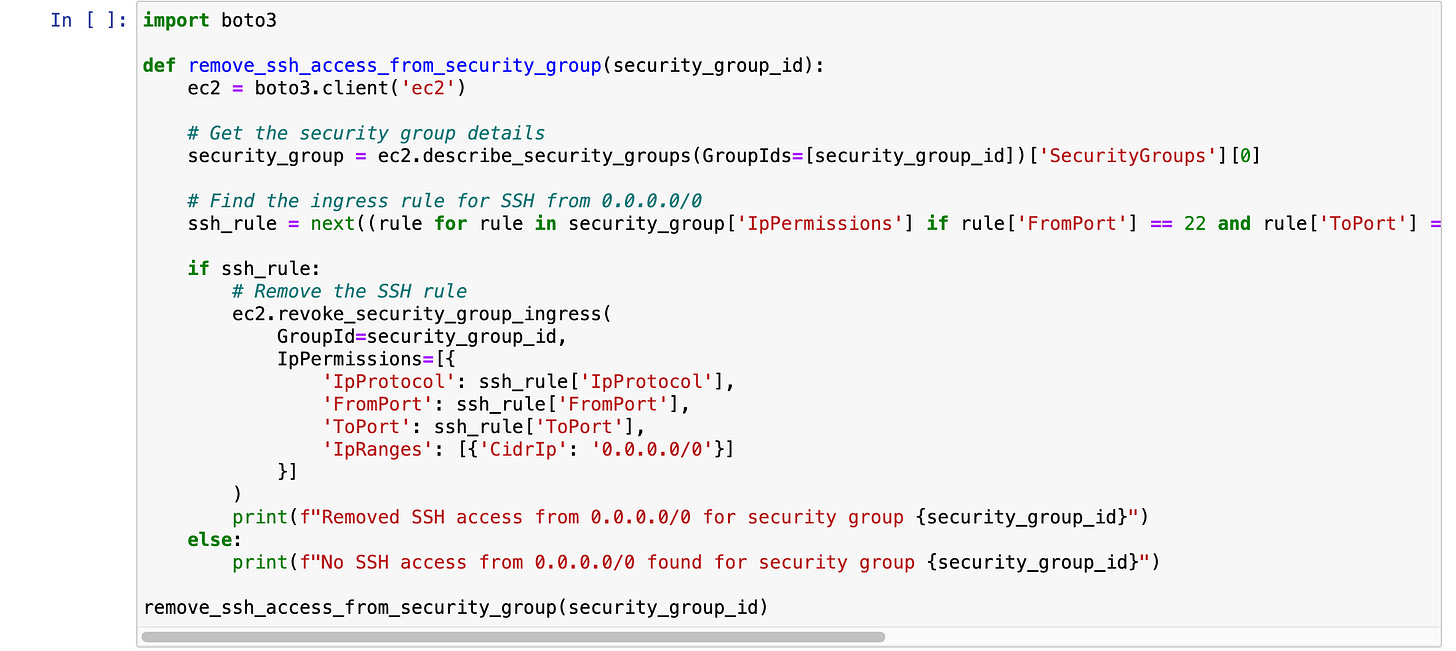

In this last step, Claude (via Bedrock) generates a Python code snippet for remediating the Security Hub finding. The code removes SSH access by deleting the security group rule that exposed port 22 to the internet.
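The generated remediation code is not reproduced here, but the same idea can be sketched with two EC2 calls. First, `describe_security_group_rules` lists the group's rules. Then `revoke_security_group_ingress` with `SecurityGroupRuleIds` deletes the offending ones. This is an illustrative sketch, not the code Claude produced. The function names are hypothetical, `dry_run` is the sketch's own flag (not EC2's `DryRun` parameter), and treating an all-traffic rule (`IpProtocol` of `"-1"`) as exposing SSH is a design choice.

```python
from typing import Any, Dict, List

# CIDRs that mean "anyone on the internet".
WORLD_CIDRS = {"0.0.0.0/0", "::/0"}


def open_rule_ids(rules: List[Dict[str, Any]], port: int = 22) -> List[str]:
    """IDs of ingress rules that expose `port` to the whole internet."""
    ids: List[str] = []
    for rule in rules:
        if rule.get("IsEgress"):
            continue  # only inbound rules matter for this finding
        source = rule.get("CidrIpv4") or rule.get("CidrIpv6")
        if source not in WORLD_CIDRS:
            continue  # restricted CIDR, prefix list, or referenced group
        protocol = rule.get("IpProtocol")
        # "-1" means all protocols and ports; otherwise check the TCP port range.
        if protocol == "-1" or (
            protocol == "tcp" and rule["FromPort"] <= port <= rule["ToPort"]
        ):
            ids.append(rule["SecurityGroupRuleId"])
    return ids


def remediate(client, group_id: str, port: int = 22, dry_run: bool = True) -> List[str]:
    """Find (and unless dry_run, revoke) world-open ingress rules for `port`."""
    kwargs: Dict[str, Any] = {"Filters": [{"Name": "group-id", "Values": [group_id]}]}
    rules: List[Dict[str, Any]] = []
    while True:  # follow NextToken until every page has been read
        page = client.describe_security_group_rules(**kwargs)
        rules.extend(page["SecurityGroupRules"])
        if not page.get("NextToken"):
            break
        kwargs["NextToken"] = page["NextToken"]
    ids = open_rule_ids(rules, port)
    if ids and not dry_run:
        client.revoke_security_group_ingress(GroupId=group_id, SecurityGroupRuleIds=ids)
    return ids
```

After a real run, calling `remediate(ec2, group_id)` again in dry-run mode should return an empty list. This is a cheap way to cover the second validation step from Step 3. Deleting an all-traffic rule removes more than SSH, so review the returned IDs before setting `dry_run=False`.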

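I also asked it to generate Terraform code, which I did not test for accuracy. Despite the "remove" in the resource names, each `aws_security_group_rule` block as generated declares an ingress rule that allows SSH from anywhere (0.0.0.0/0 and ::/0), so applying it would create the exposure rather than remove it. The security group ID (sg-8d7718ea) also does not match the one in the finding.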

```hcl
resource "aws_security_group_rule" "remove_ssh_access" {
  security_group_id = "sg-8d7718ea"
  type              = "ingress"
  from_port         = 22
  to_port           = 22
  protocol          = "tcp"
  cidr_blocks       = ["0.0.0.0/0"]
  description       = "Remove SSH access from any IP"

  lifecycle {
    create_before_destroy = true
  }
}

resource "aws_security_group_rule" "remove_ssh_access_ipv6" {
  security_group_id = "sg-8d7718ea"
  type              = "ingress"
  from_port         = 22
  to_port           = 22
  protocol          = "tcp"
  ipv6_cidr_blocks  = ["::/0"]
  description       = "Remove SSH access from any IPv6 address"

  lifecycle {
    create_before_destroy = true
  }
}
```

Conclusion

In this short post, we walked through the runbook provided by AWS for using GenAI to respond to a security finding reported by AWS Security Hub. Although the use case in this runbook is simple and fits the Level 1 SOC analyst role, the approach could be adapted to more complex security operations tasks. It is interesting to see how Claude, available on Amazon Bedrock, can be used for security tasks such as summarization and generating code snippets for validation and remediation.

Some food for thought:

Would you use this as a basis for other fun stuff in your SOC?

What could be the drawbacks or challenges of using this solution?

What other security presentations at AWS re:Invent 2024 resonated with your challenges enough to land on your roadmap for 2025?